by Jeffrey Barrett

A central theme of the exhibition Future Tense: Art, Complexity, and Uncertainty is the capacity of complex systems to self-assemble ordered structure. A notable example is the emergence of meaning from randomness. The meaning may be expressed in the intentions of an artist or in the interpretations of a viewer experiencing the artist’s work, where both artist and viewer are understood as complex systems interacting with each other and with the world. To better understand how meaning might arise from random interactions, we will focus on two simple models that illustrate the emergence of meaningful language: a signaling game and a coding game, in each of which randomness leads to successful communication.

The effects of randomness are manifest in the behavior of complex systems. We see them in the shape of cracks in the sidewalk, the behavior of markets, the flow of history, the genetic mutations that cause cancer, and the acts of friends.

Our best physical theories aim to characterize the sort of randomness we find in nature and how it behaves. The second law of thermodynamics describes the effects of the random motions of molecules. It says that entropy, a measure of microscopic randomness, tends to increase with time. A quick example illustrates the type of phenomena the law describes.

Consider an intricate ice sculpture on the beach on a hot day. It might begin as a bouquet of flowers with delicately carved stems and petals. But as the molecules in the surrounding air randomly bounce against the sculpture, the ice is warmed, and it begins to melt. As it melts, the representational information encoded in its structure is irretrievably lost. Someone looking at the patch of wet sand an hour later will be unable to tell what the ice flowers looked like or even that there was once a sculpture. Such destruction of macroscopic information by random microscopic interactions is statistically inevitable.

The gradual loss of representational structure, of information, ultimately applies to all we create. Nearly all that the ancient Greeks did, said, and made is forever lost. And such processes apply to us as complex biological systems. As the Book of Common Prayer instructs the congregation on Ash Wednesday, “Remember that you are dust, and to dust you shall return.”

But randomness also plays an essential role in creation. Meaningful order can emerge from random interactions in the context of an adaptive dynamics, an evolutionary process with positive feedback. When this sort of dynamics acts on a system, it may adapt the system to a pattern or purpose in surprising ways.

An example of order from randomness is the evolution of life itself, but a simpler example is the spontaneous formation of a crystal. Crystal formation begins with the appearance of a random seed that provides an initial scaffolding. The seed might be an impurity in the medium in which the crystal forms or a scratch in the container holding the solution. The elements of the crystal then begin to self-organize through random local interactions that settle the molecules into positions of minimum energy. This process tends to build and complete successive layers of elements, and a highly ordered crystalline lattice thereby emerges.

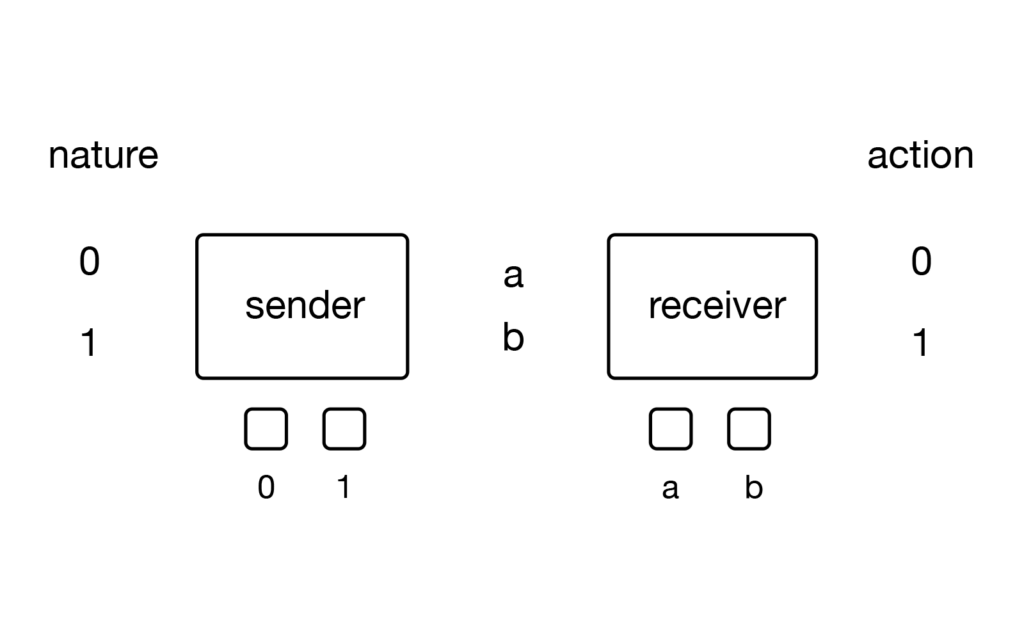

A similarly simple evolutionary process can explain the emergence of meaningful signals in the context of a signaling game. The philosopher David Lewis used signaling games to explain how linguistic conventions might be established without the aid of prior linguistic conventions.[1] Brian Skyrms, professor of Logic and Philosophy of Science at UC Irvine, later generalized Lewis’s framework, using evolutionary signaling games to show how meaningful language might emerge from random interactions.[2] The simplest sort of signaling game involves two players, a sender and a receiver, as in figure 1. The sender can see the state of nature and send a signal, but she cannot act. The receiver cannot see the state of nature, but he can see the signal and can act in a way that might benefit both agents, depending on the current state of nature. We will suppose that each player learns by simple reinforcement, which plays the role of an adaptive dynamics in the evolutionary game. It can be modeled by drawing balls from urns and adding new balls when the agents’ actions are successful.

Figure 1: A Signaling Game. On each play, the state of nature is randomly determined: either 0 or 1. The sender (large box on the left) observes nature, draws a signal, a or b, from her corresponding urn (the two small boxes below her), and sends it. The receiver (large box on the right) sees the signal, draws an action, 0 or 1, from his corresponding urn (the two small boxes below him), and performs it. The receiver’s action is successful if and only if it matches the current state of nature.

On each play of the signaling game, the current state of nature is determined randomly and without bias by the toss of a fair coin. The sender has two urns, one for each possible state (0 and 1), and each of these urns starts with two balls, one for each possible signal (a and b). To determine her signal, she observes the state of nature (whether 0 or 1), draws a signal ball at random from the corresponding urn, and sends that signal. On any given play she may send either a or b; initially the two are equally likely, since her urn holds one ball of each type. The receiver has one urn for each possible signal (a and b), and each of these urns starts with one ball for each possible act (0 and 1). The receiver sees the sender’s signal, draws an act ball at random from the corresponding urn, and performs that act, whether 0 or 1. The sender and receiver are successful if and only if the receiver’s act matches the current state of nature. In the case of success, each player returns the ball drawn to its urn and adds another ball of the same type, thereby increasing the probability of drawing that type again; otherwise, each simply returns the ball drawn.

This simple type of trial-and-error reinforcement learning is found throughout nature.[3] A puppy who finds a scrap of salmon under a picnic table at the park will be more likely to look under that table on future walks. A commuter who gets home more easily by driving a new route will be more likely to use that route on future commutes. When an agent learns by simple reinforcement, she may not even reflect on her experience as her dispositions slowly adapt to the world by trial and error. We use balls and urns to model an adaptive dynamics in which an action that was successful in a given state becomes more probable when that state occurs again.

Consider a concrete example. Suppose state 0 is “it’s sunny,” state 1 is “it’s raining,” signal a is “flash a green light,” signal b is “flash a blue light,” action 0 is “take two straw hats to the picnic” (the right thing to do on a sunny day) and action 1 is “take two umbrellas to the picnic” (the right thing to do on a rainy day). Here the sender wants to send a signal that communicates the weather to the receiver, and the receiver wants to take straw hats if it is sunny and umbrellas if it is raining. But the green and blue signals are meaningless, so there is initially no way to communicate.

On each day it is either sunny or rainy at random and with equal probability. Suppose it is sunny on the first day. The sender sees the sun and draws a ball at random from her corresponding 0 urn. Suppose that she draws a b ball and so flashes a blue light. The receiver sees the flash and draws a ball at random from his corresponding b urn. Suppose he draws a 0 ball, so he takes straw hats to the picnic, the right thing to do on a sunny day. This was a lucky draw since he might have drawn a 1 ball and taken umbrellas. But since he took straw hats on a sunny day, the play is successful, and each player returns the ball she drew to the urn from which it was drawn and adds another ball of the same type to that urn. This makes it more likely in the future that if the sender sees that it is sunny, then she will flash a blue light and more likely that if the receiver sees a blue flash, then he will take straw hats to the picnic. Had the receiver brought umbrellas, the play would have been unsuccessful, and each player would just return their ball to the urn from which it was drawn. In that case, the probabilities of signals and actions would remain the same as they were at the beginning of the day.
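The arithmetic of that first day can be checked directly. The sketch below uses exact fractions; the urn and function names are illustrative, and the only modeling assumption is that the chance of drawing a ball type equals that type's share of the balls in the urn. It shows that one success raises the chance of a blue flash on a sunny day, and of straw hats after a blue flash, from 1/2 to 2/3, so the chance of success on the next sunny day rises from 1/2 to 11/18.

```python
from fractions import Fraction

def prob(urn, ball):
    """Probability of drawing `ball` from an urn given as {ball_type: count}."""
    return Fraction(urn[ball], sum(urn.values()))

# Urns relevant to day one, each starting with one ball of each type.
sender_sunny = {"a": 1, "b": 1}    # sender's urn for state 0 (sunny)
receiver_green = {0: 1, 1: 1}      # receiver's urn for signal a (green light)
receiver_blue = {0: 1, 1: 1}       # receiver's urn for signal b (blue light)

def p_success_if_sunny():
    # Sum over the two signals the sender might flash when it is sunny;
    # success means the receiver then draws act 0 (straw hats).
    return (prob(sender_sunny, "a") * prob(receiver_green, 0)
            + prob(sender_sunny, "b") * prob(receiver_blue, 0))

print(p_success_if_sunny())        # 1/2

# Day one: sunny, blue light, straw hats. Success, so each player adds a ball.
sender_sunny["b"] += 1
receiver_blue[0] += 1

print(prob(sender_sunny, "b"), prob(receiver_blue, 0))  # 2/3 2/3
print(p_success_if_sunny())        # 11/18
```

The rainy-day urns are untouched by this play, which is why a single success only shifts the players' dispositions for the situation in which it occurred.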

It is easy to write a computer program that models two players repeatedly playing this game. In simulation, the sender and receiver initially signal and act at random, since each urn starts with one ball of each type and their draws are random. As a result, their signals are initially meaningless. But sometimes the receiver’s random act matches the current state by chance, and the play is successful. As the players reinforce what they did when they were successful, their signals gradually evolve meanings that allow the sender to communicate the state of nature reliably and the receiver to do the right thing. Argiento, Pemantle, Skyrms, and Volkov have proven that a perfect signaling language will almost surely emerge eventually in this very simple signaling game.[4] And when we run this on a computer, the players typically evolve a reliable signaling language in a few dozen plays.
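A minimal sketch of such a program follows, assuming the urn rules described above: a fair coin for nature, one ball of each type in every urn at the start, and one added ball per successful draw. The function names are illustrative, not taken from any published code. Besides counting successes, it computes the exact probability that the next play succeeds given the current urn contents, which starts at 1/2 and approaches 1 as a signaling language forms.

```python
import random

def run_signaling_game(n_plays, seed=None):
    """Repeated plays of the two-state signaling game with urn learning.

    Signals a and b are encoded as 0 and 1. sender[state][signal] and
    receiver[signal][act] hold ball counts; every urn starts with one ball per type.
    """
    rng = random.Random(seed)
    sender = [[1, 1], [1, 1]]
    receiver = [[1, 1], [1, 1]]
    successes = 0
    for _ in range(n_plays):
        state = rng.randrange(2)                                # fair coin
        signal = rng.choices((0, 1), weights=sender[state])[0]  # sender's draw
        act = rng.choices((0, 1), weights=receiver[signal])[0]  # receiver's draw
        if act == state:                                        # success: both reinforce
            sender[state][signal] += 1
            receiver[signal][act] += 1
            successes += 1
        # On failure each ball is simply returned, so the counts are unchanged.
    return sender, receiver, successes

def expected_success(sender, receiver):
    """Exact probability that the next play succeeds, given the current urns."""
    total = 0.0
    for state in (0, 1):
        for signal in (0, 1):
            p_signal = sender[state][signal] / sum(sender[state])
            p_right_act = receiver[signal][state] / sum(receiver[signal])
            total += 0.5 * p_signal * p_right_act
    return total

sender, receiver, wins = run_signaling_game(5000, seed=1)
print(f"successes: {wins}/5000, "
      f"current success probability: {expected_success(sender, receiver):.3f}")
```

Which signal comes to mean "sunny" differs from seed to seed; running several seeds is a quick way to watch the players settle on different but equally good languages.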

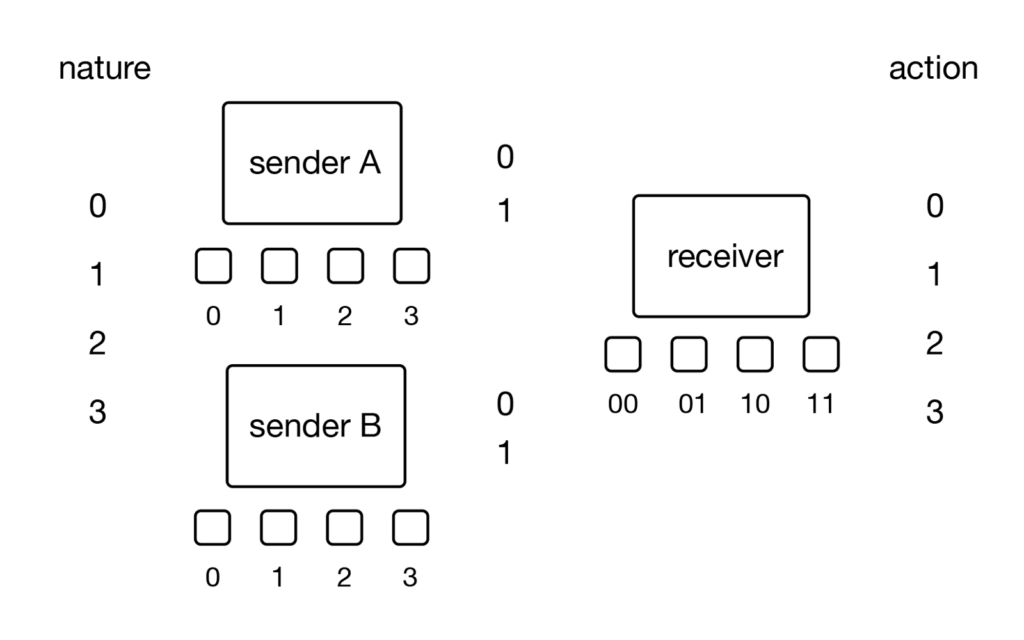

In this simple game, meaningful order emerges from random trial-and-error learning as the behavior of the two players evolves, and each signal ends up communicating precisely one bit of information. Of course, nothing in the system determines in advance what each signal will mean. The players create these meanings as they play; they are the product of random events in the context of the adaptive learning dynamics.

A more complicated game illustrates a subtler type of emergence.[5] The coding game involves two senders and one receiver, as in figure 2. At the start of each play, nature chooses one of four states, each with equal probability. The two senders observe the state, then each randomly draws a 0 or 1 signal ball from her corresponding urn. The receiver observes both signals and who sent them, then draws an act ball from his corresponding urn and performs the action.

Figure 2: The Coding Game. At the start of each play, nature takes one of four states at random. Each of the two senders sees that state then chooses a signal of 0 or 1 from her corresponding urn (the four small boxes below each sender). The receiver sees both signals and draws an action from his corresponding urn (the four small boxes labeled with the two signals he might see). The receiver’s action is successful if and only if it matches the current state of nature.

As in the simple signaling game we considered earlier, a play here is successful if and only if the receiver’s action matches the current state. If so, each player returns their ball to the urn from which it was drawn and adds a ball of the same type; otherwise, each player just returns their ball to the urn from which it was drawn.
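The coding game can be simulated the same way. In this sketch the receiver's four urns are indexed by the pair of signals (A's signal first), and, as an assumption the text does not spell out, every urn starts with one ball of each type: signals 0 and 1 for the senders, acts 0 through 3 for the receiver. Names are illustrative. The exact success probability starts at 1/4, and reaches 1 only if the senders have learned complementary partitions.

```python
import random

def run_coding_game(n_plays, seed=None):
    """Urn-learning sketch of the coding game: two senders, one receiver, four states.

    Assumption: every urn starts with one ball of each type (signals 0/1 for the
    senders, acts 0-3 for the receiver), as in the simple signaling game.
    """
    rng = random.Random(seed)
    sender_a = [[1, 1] for _ in range(4)]        # sender_a[state][signal]
    sender_b = [[1, 1] for _ in range(4)]        # sender_b[state][signal]
    receiver = [[1, 1, 1, 1] for _ in range(4)]  # receiver[2*sig_a + sig_b][act]
    successes = 0
    for _ in range(n_plays):
        state = rng.randrange(4)                  # four equiprobable states
        sig_a = rng.choices((0, 1), weights=sender_a[state])[0]
        sig_b = rng.choices((0, 1), weights=sender_b[state])[0]
        pair = 2 * sig_a + sig_b                  # receiver knows who sent which signal
        act = rng.choices(range(4), weights=receiver[pair])[0]
        if act == state:                          # success: all three players reinforce
            sender_a[state][sig_a] += 1
            sender_b[state][sig_b] += 1
            receiver[pair][act] += 1
            successes += 1
    return sender_a, sender_b, receiver, successes

def expected_success(sender_a, sender_b, receiver):
    """Exact probability that the next play succeeds, given the current urns."""
    total = 0.0
    for state in range(4):
        for sig_a in (0, 1):
            for sig_b in (0, 1):
                urn = receiver[2 * sig_a + sig_b]
                total += 0.25 * (sender_a[state][sig_a] / sum(sender_a[state])
                                 * sender_b[state][sig_b] / sum(sender_b[state])
                                 * urn[state] / sum(urn))
    return total

a, b, r, wins = run_coding_game(20000, seed=7)
print(f"successes: {wins}/20000, "
      f"current success probability: {expected_success(a, b, r):.3f}")
```

Repeating this over many seeds and counting the runs whose success probability ends near 1 is one way to explore how often an optimal language emerges; this sketch makes no claim to reproduce the specific rate reported for the author's simulations.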

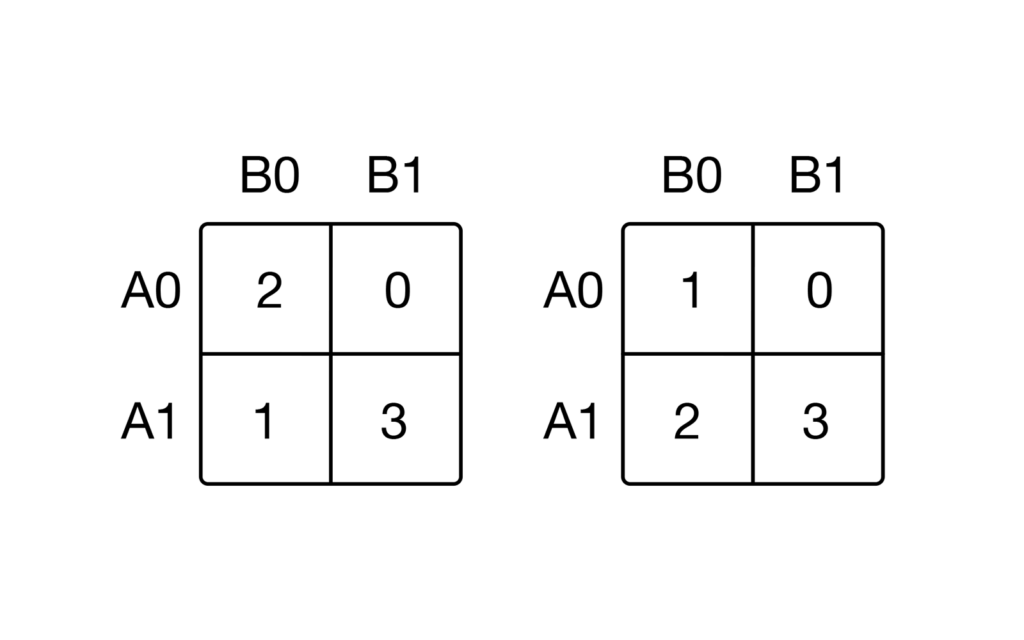

In the coding game, neither sender has the resources to communicate the state on her own. In order to evolve an optimal language, they must learn to partition nature systematically so that the two signals together fully express which of the four states the senders observe. One way this might happen is if sender A learns to distinguish between nature being in state 0 or 2 (and sends 0 if so) and being in state 1 or 3 (and sends 1 if so), while sender B learns to distinguish between nature being in state 1 or 2 (and sends 0 if so) and being in state 0 or 3 (and sends 1 if so). In this case, 00 would mean state 2, 01 would mean state 0, 10 would mean state 1, and 11 would mean state 3 (see the left-hand side of figure 3). This requires each sender to learn to attend to the conventional properties they have jointly invented as they play. When successful, they create and learn to use a systematic code to represent each of the four possible states and actions.
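The partition just described can be checked mechanically. This sketch encodes each sender's rule as a function (the names are illustrative), writes each signal pair with A's signal first, and confirms that the four states receive four distinct pairs, so a receiver can decode every state. For contrast, it also shows what happens if B merely copied A's partition: only two pairs would ever be sent, and the receiver could not tell states 0 and 2, or 1 and 3, apart.

```python
def sender_a(state):
    """Sender A: 0 if the state is 0 or 2, otherwise 1."""
    return 0 if state in (0, 2) else 1

def sender_b(state):
    """Sender B: 0 if the state is 1 or 2, otherwise 1."""
    return 0 if state in (1, 2) else 1

# Signal pair for each state, written with A's signal first.
code = {state: f"{sender_a(state)}{sender_b(state)}" for state in range(4)}
decode = {pair: state for state, pair in code.items()}

print(code)              # {0: '01', 1: '10', 2: '00', 3: '11'}
print(len(decode) == 4)  # True: every state gets its own pair of signals

# If B copied A's partition, only two distinct pairs would ever be sent.
redundant = {f"{sender_a(s)}{sender_a(s)}" for s in range(4)}
print(sorted(redundant))  # ['00', '11']
```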

In simulation, the players in the coding game always evolve a meaningful signaling language, but an optimal language evolves only about 73% of the time. When the players do evolve an optimal language, random trial-and-error learning has led to a perfect system for communicating the information required for successful coordinated action. But again, this can happen only when the players create systematically interrelated properties and then attend to whether or not each property holds. It is impossible to predict which properties the players will create on a run. Figure 3 shows the properties the players created and learned to track on two different runs. On the first run (on the left), A sends 0 and B sends 0 to represent state 2. But on the second run (on the right), A sends 1 and B sends 0 to represent state 2. The adaptive dynamics allows the players to evolve an optimal language on each run, but the inherent randomness in the dynamics leads to different languages on different runs.

Figure 3: Two codes. The left and right matrices represent perfectly efficient codes the senders and receiver might spontaneously invent on a run of the coding game. The code that emerges in play is randomly determined as the agents learn by trial-and-error reinforcement.

As with the simple signaling game, the coding game illustrates how a meaningful language may emerge in the context of a random, trial-and-error adaptive dynamics. In both games, meaning is created as the players interact with each other and the world. But the coding game also requires the agents to learn to attend to their created properties in the world.

The evolution of attention is the key idea behind large language models like ChatGPT and Claude. The transformer architecture of such models was invented and first described in a paper by a group of Google scientists entitled “Attention is All You Need,” a play on the Beatles’ song “All You Need is Love.”[6]

A large language model (LLM) is a network with ordered layers of nodes connected by weighted edges. One might picture the network as a set of dots (nodes) and lines (edges) that connect nodes on one layer to nodes on the next layer. Every node is connected to at least one other, and each edge is assigned a numerical weight, a real number that may be positive or negative; roughly, the larger its magnitude, the stronger the effect of one node on the nodes it is connected to on the next layer. When an input is presented to the input nodes of a trained model, the network produces a random result in the output nodes with probabilities that depend on the weights associated with the edges in the network. The weights are determined by how the model was trained. An LLM is trained using a huge collection of data, a standard of success, and an adaptive dynamics that gradually fits the behavior of the network to the standard of success by a trial-and-error learning process.
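To make "probabilities that depend on the weights" concrete, here is a toy sketch of a single layer: each output node sums its weighted inputs, a softmax turns those sums into probabilities, and one output is drawn at random. This is a deliberate simplification, assuming one layer with made-up weights; real LLMs stack many layers with nonlinearities between them, and their trained weights are real numbers that can be negative. Softmax is the usual way such models turn output scores into a probability distribution over candidate tokens.

```python
import math
import random

def layer(inputs, weights):
    """Weighted sums: weights[j][i] is the edge from input node i to output node j."""
    return [sum(w * x for w, x in zip(row, inputs)) for row in weights]

def softmax(scores):
    """Turn raw scores into probabilities that are positive and sum to 1."""
    m = max(scores)                          # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def sample_output(inputs, weights, rng):
    """Draw one output node at random, with probabilities set by the weights."""
    probs = softmax(layer(inputs, weights))
    choice = rng.choices(range(len(probs)), weights=probs)[0]
    return choice, probs

# Hypothetical toy weights: 3 input nodes feeding 4 output nodes (say, 4 candidate tokens).
toy_weights = [[0.2, -1.0, 0.5],
               [1.5, 0.3, -0.2],
               [-0.7, 0.8, 0.1],
               [0.0, 0.0, 0.0]]
choice, probs = sample_output([1.0, 0.5, -0.3], toy_weights, random.Random(0))
print(choice, [round(p, 3) for p in probs])
```

Changing any weight changes the probabilities, and training is the process of adjusting all such weights at once so that likely outputs are good ones.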

As a transformer model is trained, the features of the input to which the model attends and how it behaves when it observes these features, both of which depend on edge weights, are gradually tuned by the adaptive dynamics to fit the actual output of the model to the best possible output given the user-specified standard of success. In this way, as the system evolves, it learns both what to attend to and how to act on what it finds. Later, when it is presented with something it has never seen, it looks in places that have mattered for successful action in the past, then it uses what it sees to produce an output.
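The mechanism at the heart of the "Attention is All You Need" paper can be sketched in a few lines. Below is single-head scaled dot-product attention, softmax(Q Kᵀ / √d_k) V, written with plain lists: each query scores every key, the scores become weights that say where to look, and the output is the correspondingly weighted mix of values. In a trained transformer the queries, keys, and values are produced from the input by learned weight matrices, and tuning those matrices is one way the model learns what to attend to; the vectors below are hypothetical.

```python
import math

def softmax(scores):
    m = max(scores)                          # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention, softmax(Q K^T / sqrt(d_k)) V, one query row at a time."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # How well this query matches each key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)            # where this position "looks"
        all_weights.append(weights)
        # Output: the values, mixed in proportion to the attention weights.
        outputs.append([sum(w * v[j] for w, v in zip(weights, values))
                        for j in range(len(values[0]))])
    return outputs, all_weights

# Hypothetical 2-dimensional vectors for three input positions and one query.
keys = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]
values = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]
queries = [[2.0, 0.0]]
out, w = attention(queries, keys, values)
print([round(x, 3) for x in w[0]], [round(x, 3) for x in out[0]])
```

Here the query points in the same direction as the first key, so most of the attention weight, and hence most of the output, comes from the first position.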

One can assess how well a transformer model learns saliences and connections by probing it with inputs that are unlikely to have been used in its training data. Consider the following prompt and the reply from Anthropic’s Claude 3.5 Sonnet.

*

Prompt: Explain two connections between emergent meaning by means of a random adaptive dynamics and art, complexity, and uncertainty.

Reply: There are several interesting connections between emergent meaning through random adaptive dynamics and the concepts of art, complexity, and uncertainty. Here are two key connections to consider:

Unpredictability and creative emergence:

Both random adaptive dynamics and artistic processes often involve unpredictable outcomes emerging from complex interactions. In adaptive systems, meaning can emerge unexpectedly as elements interact and evolve over time without a predetermined plan. Similarly, in art, the creative process often involves experimentation and allowing unexpected results to emerge. Artists may start with an initial idea but then respond intuitively to how their work develops, embracing uncertainty and complexity. The final artistic product often has emergent qualities that weren’t fully anticipated at the outset. This parallel highlights how both domains harness uncertainty and complexity as generative forces.

Pattern recognition and meaning-making:

Adaptive systems and artistic interpretation both rely heavily on pattern recognition to derive meaning from complexity. In random adaptive dynamics, meaningful structures or behaviors can self-organize out of initially disordered states as the system identifies and amplifies useful patterns. Likewise, both artists and viewers engage in pattern recognition to create and interpret meaning in artworks. Abstract art in particular often presents complex visual information that viewers must actively engage with to discern patterns and construct meaning. This process mirrors how meaning emerges from the complexity of adaptive systems, with the human mind acting as the pattern-recognition mechanism that extracts signal from noise.

*

The prompt asks Claude 3.5 for connections between the themes of the present exhibition and the related themes of this essay. While Claude likely never saw this prompt when it was trained, it does strikingly well in its reply. Strengthening Claude’s reply, I would argue that both artists and viewers are nothing but adaptive systems whose actions are forged by random adaptive processes; but given the inherent uncertainty in its audience, Claude shows wisdom in its more modest position.

Future AI systems will certainly do better, but it is impressive that Claude has learned to associate a rich collection of topics with so meager a prompt and to thread them together into cogent and interesting positions. Its behavior illustrates how well a simple trial-and-error algorithm can learn to identify natural saliences and to find connections between them if presented with enough data.

While the details differ, the emergent behavior of an LLM like Claude is similar to that of the players in the coding game. Each system is initially capable only of random actions. The players in the coding game learn by trial and error to communicate using a meaningful language they gradually create for the purpose. Claude learns by trial and error to identify salient aspects of a prompt, to find patterns in these aspects, and to generate sensible responses. In each case, the adaptive system’s ability to identify saliences and to exploit them for successful action emerges from a trial-and-error adaptive dynamics as it interacts with the world.

Inventing languages, finding saliences, and learning connections involve creativity not unlike that exhibited by an artist. Each of the processes we have considered involves a random element. It is this that makes the process unpredictable. The players in the coding game must create conventions and learn to adhere to them in order to be successful. It is impossible to predict which conventions they will create. Claude must learn to reply to questions it has never been asked to be successful, and in doing so it may say things that have never been said. And, like the works in the exhibition, its creations may spark new insights.

Our capacities have evolved under trial-and-error natural selection and by means of trial-and-error learning. The inherent randomness of these processes is baked into our nature. It is this that endows us with the ability to create things that are novel and surprising. The present exhibition itself is the product of randomness in the context of an adaptive dynamics. Each artist’s work has been influenced by their noisy experience. The curator has selected works in ways that depend on his experience and interactions with the artists. The resulting collection has the capacity to inspire new insights and understanding in us. The artworks become a part of us, a part of how we act and understand.

Notes

1. David Lewis, Convention (Harvard University Press, 2020).

2. Brian Skyrms, “Signals,” Philosophy of Science 75 (5) (2008): 489–500.

3. See Richard J. Herrnstein, “On the Law of Effect,” Journal of the Experimental Analysis of Behavior 13 (1970): 243–266, for early work on reinforcement learning in birds. For an example of reinforcement learning in humans, see Alvin E. Roth and Ido Erev, “Learning in Extensive Form Games: Experimental Data and Simple Dynamical Models in the Immediate Term,” Games and Economic Behavior 8 (1995): 164–212.

4. See Raffaele Argiento, Robin Pemantle, Brian Skyrms, and Stanislav Volkov, “Learning to Signal: Analysis of a Micro-Level Reinforcement Model,” Stochastic Processes and Their Applications 119 (2) (2009): 373–390. The theorem applies only to this two-state, two-term, two-action signaling game with unbiased nature. Convergence is not guaranteed if there are more than two states and acts or if nature is biased. The coding game discussed in the text is an example of a game where convergence is uncertain.

5. See Jeffrey A. Barrett, “Dynamic Partitioning and the Conventionality of Kinds,” Philosophy of Science 74 (2007): 527–546, for a discussion of this game.

6. Ashish Vaswani, Noam Shazeer, Niki Parmar, Jakob Uszkoreit, Llion Jones, Aidan N. Gomez, Łukasz Kaiser, and Illia Polosukhin, “Attention is All you Need,” Advances in Neural Information Processing Systems 30 (2017), 31st Conference on Neural Information Processing Systems (NIPS 2017), Long Beach, CA, USA, accessed February 2, 2025.